Cloudflare's Outages: Production Testing Risks

Cloudflare's recent outages highlight the dangers of deploying infrastructure changes directly to production environments without thorough prior testing.

Date: Dec 28, 2025

Cloudflare's recent system disruptions point to problems with its deployment strategy, particularly the practice of testing changes directly in production. This approach, which bypasses staging environments, has led to significant service failures. Two incidents, one involving a buffer-size change made in response to a React Server Components vulnerability and one involving a new Rust-based proxy, show a recurring pattern of insufficient validation before live deployment. They are a reminder to all organizations that rigorous testing is needed to prevent widespread service interruptions and maintain customer trust.

Cloudflare’s Recurring Outages Raise Questions on Deployment Practices

Recent service disruptions at Cloudflare have brought into sharp focus the importance of rigorous testing for network infrastructure changes. Standard practice holds that any modification to a live production network should first be validated in a staging environment, a dedicated replica of the production setup that allows comprehensive testing without risking live service.

Developers typically work within isolated testing and development environments, which serve as pre-staging phases for new and experimental changes. This layered approach is designed to catch potential issues before they reach end users. Cloudflare’s recent incidents suggest a departure from these established methods, raising concerns about its deployment strategy.

The company’s approach to implementing changes appears to bypass these crucial preliminary stages. This practice, often referred to as “testing on production,” carries inherent risks that have manifested in recent service interruptions. The implications extend beyond Cloudflare, serving as a cautionary tale for any organization considering similar shortcuts in their infrastructure management.

The December 5th Outage: A Case Study in Production Deployment Risks

Cloudflare’s post-mortem report on the December 5th outage detailed a sequence of events that began with a change to how its network buffers HTTP request bodies. The objective was to raise the buffer to 1 MB, a measure intended to protect customers against CVE-2025-55182, a critical vulnerability in React Server Components (RSC). Rather than being fully validated in a staging environment first, the change was rolled out on the production network.

During this live rollout, a compatibility problem surfaced: an internal testing tool used by Cloudflare did not support the newly increased buffer size. The discovery prompted a decision to disable the testing tool globally, through a path that circumvented the established gradual rollout mechanism. The unexpected interaction between the buffer change and the incompatible testing tool set up a failure that had not been anticipated.

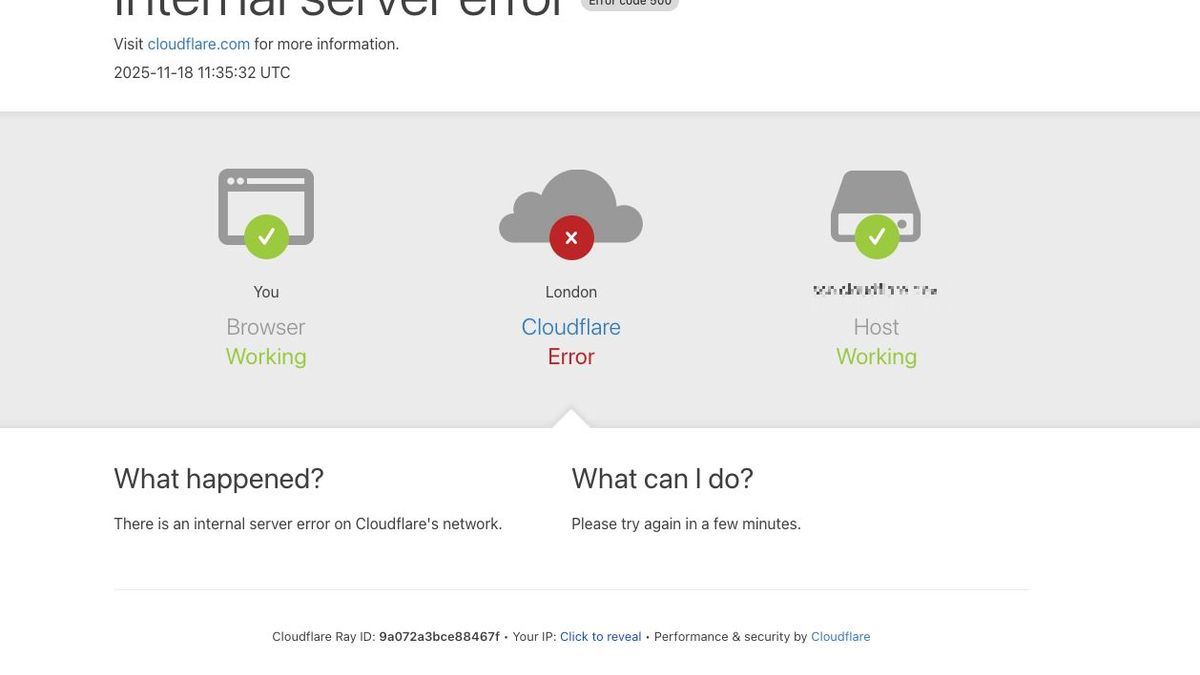

This abrupt disabling action had immediate and severe consequences. It caused code in the original Lua-based FL1 proxy to encounter a nil value it did not handle, triggering widespread HTTP 500 errors for affected requests. While the issue was identified and fixed relatively quickly compared to previous outages, it exposed a significant gap in Cloudflare’s pre-deployment validation. The incident showed the danger of making fundamental infrastructure changes directly on a live system without comprehensive pre-production testing.
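The failure mode described above, code meeting a missing value it did not expect, can be illustrated with a minimal sketch. This is not Cloudflare's code: it is written in Python rather than Lua, and the `action`, `execute`, and `blocked` field names are hypothetical. It only shows the general idea of treating an absent field as a normal case instead of dereferencing it.

```python
from typing import Any, Dict


def evaluate(rule_result: Dict[str, Any]) -> str:
    """Decide what to do with a request based on one rule result.

    Field names are hypothetical and chosen only for illustration.
    """
    if rule_result.get("action") != "execute":
        return "allow"

    details = rule_result.get("execute")
    # A rule that was switched off may carry no "execute" payload.
    # Skip it explicitly instead of indexing into a missing value,
    # which would raise and surface to the client as a server error.
    if details is None:
        return "skip"

    return "block" if details.get("blocked") else "allow"
```

A test suite run in staging that includes a disabled rule, such as `evaluate({"action": "execute"})`, would exercise the missing-payload path before any live traffic does.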

Systemic Challenges and Previous Incidents

The December 5th incident is not an isolated event but part of a broader pattern in Cloudflare’s deployment framework. A previous major disruption occurred when its new, Rust-based FL2 proxy failed upon encountering a corrupted input file. In that instance, the newly introduced proxy could not gracefully handle unexpected data, leading to another service interruption. This earlier event also pointed to insufficient testing and validation before a critical new component was integrated into the live network.
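Graceful handling of a bad input file usually means validating it before use and keeping the last known-good version when validation fails. The sketch below illustrates that pattern under stated assumptions: the JSON list format, the `MAX_ENTRIES` limit, and the function names are all hypothetical and are not taken from Cloudflare's proxy.

```python
import json
from typing import Any, List

MAX_ENTRIES = 200  # hypothetical upper bound on entries in the file


def parse_entries(raw: str, max_entries: int = MAX_ENTRIES) -> List[Any]:
    """Parse and validate the file contents, raising ValueError if unusable."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, list):
        raise ValueError("expected a JSON list")
    if len(data) > max_entries:
        raise ValueError(f"{len(data)} entries exceeds limit of {max_entries}")
    return data


def load_entries(raw: str, last_good: List[Any],
                 max_entries: int = MAX_ENTRIES) -> List[Any]:
    """Return the new entries, or fall back to the last known-good set."""
    try:
        return parse_entries(raw, max_entries)
    except ValueError:
        # Keep serving with the previous configuration instead of failing.
        return last_good
```

A production version would also log or alert on the fallback so that a rejected file does not go unnoticed.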

The recurring pattern of issues surfacing directly in production environments suggests a fundamental disconnect from standard system administration best practices. These practices emphasize meticulous testing, rigorous quality assurance, and a phased deployment approach that minimizes risk to operational services. Bypassing these steps, even for seemingly minor updates, can have cascading effects across complex network infrastructures.

The outages serve as a stark warning to all organizations about the potential fallout of neglecting thorough pre-production validation. While internal pressures or a desire for rapid deployment might push companies toward “quick deployments on production,” the long-term costs of customer dissatisfaction and service disruption far outweigh any perceived short-term benefits. The incidents at Cloudflare highlight that even major technology providers are not immune to the consequences of inadequate testing.

The Importance of Staging Environments and Robust Testing

Cloudflare’s experience reinforces the foundational principle of using dedicated staging environments for all network infrastructure changes. A staging environment is as close a replica of the production system as practical, providing a safe sandbox where new code, configuration updates, and system modifications can be tested under realistic conditions. This includes simulating various loads, edge cases, and failure scenarios without affecting live users.

Robust testing in these environments involves not just functional validation but also performance testing, security audits, and compatibility checks with existing systems and tools. The aim is to uncover unexpected interactions or vulnerabilities, such as the incompatibility of the testing tool with the increased buffer size in the December 5th outage, before they can impact production. Gradual rollout mechanisms such as canary deployments, along with related techniques such as dark launches, further reduce risk by introducing changes to a small subset of users or traffic first, allowing real-world validation in a controlled manner.
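The gradual rollout idea can be sketched as a loop that widens exposure one stage at a time and rolls back as soon as a health check fails. This is a minimal illustration, not any vendor's deployment system: `staged_rollout` and its `apply`, `healthy`, and `rollback` callbacks are hypothetical names, and a real system would wait and collect metrics between stages.

```python
from typing import Callable, Sequence


def staged_rollout(
    stages: Sequence[int],
    apply: Callable[[int], None],
    healthy: Callable[[], bool],
    rollback: Callable[[], None],
) -> bool:
    """Expose a change to growing percentages of traffic.

    Returns True if every stage passed its health check, or False if
    a stage failed and the change was rolled back.
    """
    for percent in stages:
        apply(percent)      # route this share of traffic to the new version
        if not healthy():   # e.g. compare error rate against a baseline
            rollback()      # stop widening and restore the previous version
            return False
    return True
```

Called with stages such as `[1, 10, 50, 100]`, a change that misbehaves at the first stage affects only a small share of traffic before it is reverted.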

Ultimately, preventing outages relies on a culture that prioritizes testing and risk mitigation over speed of deployment. Companies must invest in comprehensive testing infrastructure, enforce strict deployment policies, and ensure that all changes undergo a full lifecycle of development, testing, and staging before ever touching a live production network. This disciplined approach is essential for maintaining service reliability, building customer trust, and avoiding costly disruptions in an increasingly interconnected digital landscape.