
Nvidia's Rubin Architecture Prioritizes Network Innovation

Nvidia's new Rubin architecture, unveiled at CES, relies on advanced networking chips as much as on the GPU itself to raise AI performance and efficiency.

- Date

- Jan 10, 2026


Nvidia's recently unveiled Rubin architecture introduces a significant leap in AI computing, driven not just by its powerful GPU, but by an integrated suite of advanced networking chips. This new platform promises substantial reductions in inference costs and GPU requirements for training large models. Key to its innovation is the concept of 'extreme co-design,' where CPUs, GPUs, and multiple networking components work in concert. This approach expands in-network computing, offloading operations from GPUs to the network to enhance efficiency and minimize idle time across distributed AI workloads, signaling a new era in data center architecture for AI.


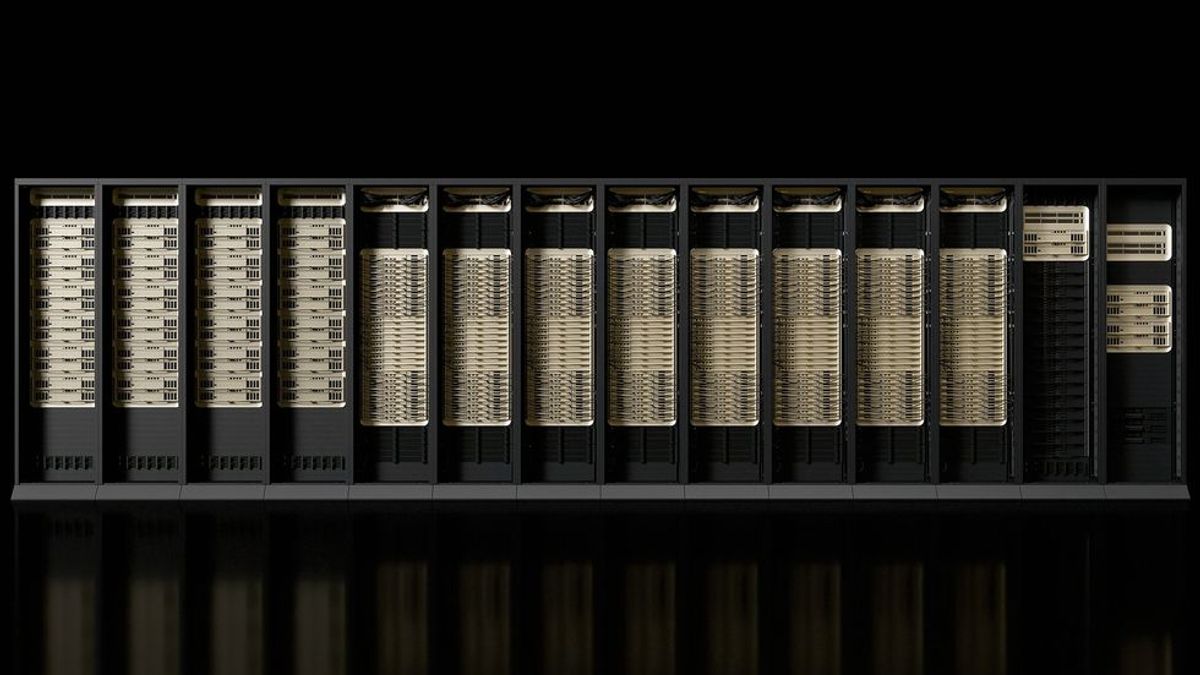

Nvidia recently unveiled its new Rubin architecture at the Consumer Electronics Show in Las Vegas, a development poised to significantly impact artificial intelligence computing. This platform, slated for customer delivery later this year, promises a tenfold reduction in inference costs and a fourfold decrease in the number of GPUs required for training specific models, compared to Nvidia’s existing Blackwell architecture. The announcement highlights a strategic shift towards comprehensive system design.

While the Rubin GPU itself is a powerhouse, achieving 50 petaFLOPS (50 quadrillion floating-point operations per second) for 4-bit computation in transformer-based inference workloads, its individual performance is only one piece of the puzzle. The architecture’s true innovation lies in the synergy of its components. This holistic approach is crucial for optimizing the intensive demands of modern AI.

The Rubin platform encompasses a total of six new chips, including the Vera CPU, the Rubin GPU, and four distinct networking chips. Gilad Shainer, Nvidia’s senior vice president of networking, emphasized that performance gains are achieved when these components function as an integrated whole. This “extreme co-design” philosophy suggests that the way units are interconnected is as vital as their individual capabilities, fundamentally altering performance outcomes. Such an approach underscores the increasing complexity and interconnectedness of high-performance computing systems.

Advancing Distributed AI Computing with “In-Network Compute”

Modern AI workloads, both in training and inference, increasingly rely on vast numbers of GPUs operating simultaneously. Historically, inference tasks often ran on single GPUs within a single server, but this paradigm has evolved significantly. According to Shainer, inferencing is now becoming highly distributed, spanning across multiple racks and demanding seamless communication between hundreds or even thousands of processing units. This necessitates a robust and efficient network infrastructure capable of uniting these dispersed resources.

To facilitate these large-scale distributed tasks, the objective is to make as many GPUs as possible function cohesively as a single entity. This is the primary goal of the “scale-up network,” which manages the connections between GPUs within a single rack. Nvidia addresses this critical linkage with its NVLink networking chip. The new Rubin architecture introduces the NVLink6 switch, which boasts double the bandwidth of its predecessor, the NVLink5 switch, achieving 3,600 gigabytes per second for GPU-to-GPU connections, a substantial increase from 1,800 GB/s.
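To put the bandwidth figures in perspective, here is a rough back-of-the-envelope sketch. It uses only the two GB/s numbers quoted above; the 36 GB payload is a made-up value chosen for easy arithmetic, and the calculation ignores latency and protocol overhead, so it is a best-case lower bound rather than a measured result.

```python
# Bandwidth figures quoted in the article, in gigabytes per second.
NVLINK5_GB_PER_S = 1800
NVLINK6_GB_PER_S = 3600


def transfer_time_ms(payload_gb: float, bandwidth_gb_per_s: float) -> float:
    """Best-case time to move a payload, ignoring latency and overhead."""
    return payload_gb * 1000 / bandwidth_gb_per_s


if __name__ == "__main__":
    payload_gb = 36  # hypothetical payload size, not from the article
    print("NVLink5:", transfer_time_ms(payload_gb, NVLINK5_GB_PER_S), "ms")
    print("NVLink6:", transfer_time_ms(payload_gb, NVLINK6_GB_PER_S), "ms")
```

Under these assumptions, doubling the bandwidth halves the best-case transfer time for the same payload.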

Beyond the doubling of bandwidth, the scale-up chips also feature an increased number of SerDes (serializers/deserializers), which let more data travel over fewer wires. More importantly, these chips expand the range of calculations that can be performed directly within the network. Shainer clarified that the scale-up network is not merely a data conduit but a sophisticated computing infrastructure. It performs certain operations on the switch itself, integrating computation directly into the data flow.

The rationale behind offloading operations from GPUs to the network is twofold, enhancing both efficiency and speed. Firstly, it allows certain tasks to be executed just once, eliminating the need for every GPU to perform the same computation redundantly. A prime example is the “all-reduce” operation in AI training. During this process, each GPU computes a gradient based on its own data batch. For accurate model training, all GPUs must collectively know the average gradient across all batches. Rather than each GPU sending its gradient to every other GPU and then independently calculating the average, performing this averaging operation once within the network significantly conserves computational time and power.
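The difference between the two approaches can be illustrated with a toy model. This is a minimal sketch, not Nvidia's implementation: the function names are hypothetical, the "switch" is just a Python function, and the message counts assume the simple everyone-sends-to-everyone scheme described above rather than the ring or tree algorithms that production software typically uses.

```python
from typing import List, Tuple

Gradient = List[float]


def average(gradients: List[Gradient]) -> Gradient:
    """Element-wise mean of one gradient per GPU."""
    n = len(gradients)
    return [sum(values) / n for values in zip(*gradients)]


def allreduce_all_to_all(gradients: List[Gradient]) -> Tuple[List[Gradient], int, int]:
    """Every GPU sends its gradient to every peer, then averages locally."""
    n = len(gradients)
    messages = n * (n - 1)  # each GPU sends to n - 1 peers
    reductions = n          # every GPU repeats the same averaging
    results = [average(gradients) for _ in range(n)]
    return results, messages, reductions


def allreduce_in_network(gradients: List[Gradient]) -> Tuple[List[Gradient], int, int]:
    """Each GPU sends once to the switch, which averages once and replies."""
    n = len(gradients)
    messages = 2 * n        # n gradients up, n averaged results down
    reductions = 1          # the averaging happens a single time
    avg = average(gradients)
    results = [list(avg) for _ in range(n)]
    return results, messages, reductions


if __name__ == "__main__":
    grads = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
    for fn in (allreduce_all_to_all, allreduce_in_network):
        results, messages, reductions = fn(grads)
        print(fn.__name__, results[0], "messages:", messages, "reductions:", reductions)
```

Both functions give every GPU the same averaged gradient. In this toy model the all-to-all message count grows with the square of the GPU count, while the in-network version grows linearly and averages only once.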

Secondly, in-network computing helps mask the latency involved in data movement between GPUs by processing data en route. Shainer used the analogy of a pizza parlor trying to cut delivery times. Adding more ovens or workers might increase pizza production, but it doesn’t shorten the time it takes to deliver a single pizza. However, if an oven were placed in a car, allowing the pizza to bake during transit, significant time savings would be realized. This illustrates how computations performed as data travels between GPUs can dramatically reduce overall processing time. While in-network computing has been a feature of Nvidia’s architecture since around 2016, this iteration broadens the scope of computations that can be handled within the network, supporting a wider array of workloads and numerical formats.
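The pizza analogy comes down to simple arithmetic: work done in sequence adds up, while work done during transit is hidden behind the longer of the two steps. The sketch below assumes the two steps can overlap fully, and the bake and drive times are invented for illustration.

```python
def sequential_minutes(bake: float, drive: float) -> float:
    """Bake first, then drive: the two durations add up."""
    return bake + drive


def overlapped_minutes(bake: float, drive: float) -> float:
    """Bake while driving: the longer step sets the total time."""
    return max(bake, drive)


if __name__ == "__main__":
    bake, drive = 10, 15  # hypothetical durations in minutes
    print("oven in the shop:", sequential_minutes(bake, drive), "min")
    print("oven in the car: ", overlapped_minutes(bake, drive), "min")
```

The same reasoning applies when a switch performs a reduction while data is already in flight: the computation no longer adds its own delay on top of the transfer.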

Enhancing Connectivity: Scaling Out and Across Data Centers

The remaining networking chips within the Rubin architecture form the “scale-out network,” responsible for interconnecting different racks within the data center. This critical component ensures seamless communication and collaboration across larger hardware deployments. These chips include the ConnectX-9, a high-performance networking interface card designed for robust data transfer.

Another key component is the BlueField-4, a specialized data processing unit. This unit is strategically paired with two Vera CPUs and a ConnectX-9 card, creating a powerful combination dedicated to offloading demanding networking, storage, and security tasks from the main processing units. This offloading capability frees up GPUs and CPUs to focus on core AI computations, boosting overall system efficiency.

Completing the scale-out network is the Spectrum-6 Ethernet switch, which leverages co-packaged optics to facilitate high-speed data transmission between racks. This new Ethernet switch not only doubles the bandwidth of previous generations but also significantly minimizes “jitter.” Jitter, defined as the variation in arrival times of information packets, is a critical factor in distributed computing. The presence of jitter means that if different racks are performing distinct parts of a calculation, their results will arrive at varying times. This leads to costly idle periods for faster racks as they await the slowest packet, effectively translating jitter directly into financial losses due to underutilized expensive equipment. The Spectrum-6 aims to eliminate these inefficiencies by ensuring predictable and synchronized data delivery across the data center.
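The cost of jitter can be made concrete with a small model. This is a simplified sketch under stated assumptions: each rack's result arrives at a given time, nothing proceeds until the slowest result is in, and the arrival times are invented numbers rather than measurements.

```python
from typing import List


def idle_times(arrivals_ms: List[float]) -> List[float]:
    """Time each rack spends waiting for the slowest result to arrive."""
    slowest = max(arrivals_ms)
    return [slowest - t for t in arrivals_ms]


def wasted_fraction(arrivals_ms: List[float]) -> float:
    """Share of total rack time spent idle during one synchronized step."""
    slowest = max(arrivals_ms)
    return sum(idle_times(arrivals_ms)) / (slowest * len(arrivals_ms))


if __name__ == "__main__":
    steady = [10.0, 10.0, 10.0, 10.0]   # hypothetical: no jitter
    jittery = [8.0, 10.0, 10.0, 12.0]   # hypothetical: same mean, more spread
    print("steady: ", wasted_fraction(steady))
    print("jittery:", wasted_fraction(jittery))
```

Both sets of arrival times share the same 10 ms average, yet in this model the jittery case leaves one sixth of the available rack time idle, because every rack waits for the 12 ms straggler.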

While Nvidia’s current suite of new chips primarily focuses on intra-data center connectivity, the company recognizes the expanding demands of modern AI. None of the new chips are specifically designed for “scale-across” connections, which link multiple data centers together. However, Shainer emphasized that this represents the next frontier in AI infrastructure. He noted that even 100,000 GPUs are becoming insufficient for certain advanced workloads, driving an urgent need to connect entire data centers to form even larger, more powerful computational networks. This foresight suggests that future iterations of Nvidia’s architecture will likely address the challenges of inter-data center communication, further pushing the boundaries of distributed AI processing. The continuous evolution in networking capabilities is essential for keeping pace with the exponential growth in AI model complexity and data volume.