AI AGENTS

Agentic Infrastructure for 2026: Three Pillars

Achieving enterprise-wide autonomous AI agent workflows requires a fundamental overhaul of existing architectural blueprints to support asynchronous, event-driven operation.

- Read time: 7 min read
- Word count: 1,568 words
- Date: Jan 5, 2026




The year 2025 saw widespread “agentic disillusionment” across the enterprise sector. Businesses poured resources into advanced AI agents, expecting them to manage complex operations as effortlessly as human professionals. Instead, many of these agents hit what is often called a “production wall.” The core issue was not the intelligence of the underlying large language models but a fundamental incompatibility with existing legacy infrastructure, particularly the request-response systems that have been refined over decades.

According to research from BCG on ‘Future-Built’ companies, the true value of AI agents can only be unlocked when organizations undertake a complete redesign of their workflows, prioritizing an “agentic-first” approach. This means shifting focus from the AI’s cognitive capabilities to a robust, responsive operational framework. If 2025 emphasized the AI’s “brain,” then 2026 must concentrate on building its “nervous system.” Attaching a self-correcting, multi-step agent to an outdated enterprise resource planning (ERP) system built in 2018 is simply unsustainable.

To move from experimental pilots to fully integrated, enterprise-wide autonomous workflows, a comprehensive architectural overhaul is imperative: away from rigid, synchronous command structures and toward a fluid, asynchronous, event-driven paradigm. This shift is what makes AI agent deployment scalable and efficient across the entire organization. Without these foundational changes, much of the potential of AI agents will remain untapped.

Redefining Infrastructure for Autonomous Agents

Integrating advanced AI agents demands a rethinking of enterprise infrastructure. Traditional architectural frameworks, designed for human interaction and synchronous processes, cannot accommodate the dynamic, autonomous nature of AI agents. A truly agentic-first environment needs systems that can adapt, self-diagnose, and operate without constant human oversight, and that requires strategic investment in three technological pillars: semantic telemetry, stateless API design, and a context-rich metadata layer.

The goal is an environment where agents can independently navigate complex tasks and respond to unforeseen challenges. That means fundamentally redesigning the underlying infrastructure to be agent-native, rather than simply augmenting existing systems with AI, so that it supports continuous operation, learning, and adaptation across all enterprise functions. This foundational change is paramount for organizations that want AI agents to deliver strategic advantage and operational excellence in the coming years.

Semantic Telemetry: Machine-Readable Insights

For the past three decades, the design of observability systems has been primarily human-centric. Dashboards featuring intuitive red and green indicators were crafted to allow DevOps engineers to quickly identify issues such as latency spikes. However, an AI agent cannot interpret a Grafana dashboard in the same way. When an agent encounters an error during a complex workflow, it requires an understanding of why the error occurred, presented in a format it can autonomously process and act upon.

Traditional logging practices often result in cryptic or purely structural log entries that lack context for machine interpretation. In 2026, the imperative is to transition toward semantic telemetry. This approach involves enriching system logs with natural language context, enabling a large language model (LLM) to parse and comprehend the information, thereby facilitating self-diagnosis. For instance, instead of a generic log entry like “Error 500: null pointer exception,” semantic telemetry would provide a detailed explanation such as: “Error: The procurement agent failed to retrieve the vendor ID because the ‘Last_updated’ field in the vendor database was null, preventing a valid match.”
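To make the contrast concrete, here is a minimal Python sketch of a semantic log event, assuming a JSON-lines log pipeline. The `SemanticError` class and its field names (`component`, `explanation`, `business_impact`) are hypothetical choices for illustration, not a standard schema, and the `business_impact` text is an invented example.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class SemanticError:
    """A log event that pairs the raw error with natural-language context."""

    code: str             # the original technical error, kept for engineers
    component: str        # which agent or service failed
    explanation: str      # why it failed, phrased so an LLM can reason about it
    business_impact: str  # what the failure means for the workflow

    def to_log_line(self) -> str:
        # One JSON object per line works with most log shippers and is
        # still readable when pasted into an LLM prompt.
        return json.dumps({"level": "ERROR", **asdict(self)})


# The cryptic entry from the text...
raw_entry = "Error 500: null pointer exception"

# ...and the same failure expressed as semantic telemetry.
enriched = SemanticError(
    code="HTTP 500: null pointer exception",
    component="procurement-agent",
    explanation=(
        "The procurement agent failed to retrieve the vendor ID because the "
        "'Last_updated' field in the vendor database was null, preventing a valid match."
    ),
    business_impact="Purchase order cannot be matched to a vendor and stays blocked.",
)

print(enriched.to_log_line())
```

Keeping the raw `code` alongside the explanation means engineers lose nothing, while an agent (or the LLM behind it) gets a sentence it can act on.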

The return on investment (ROI) for semantic telemetry comes from autonomy. When an AI agent can interpret its own telemetry, it gains the capability to initiate self-healing protocols without human intervention, potentially cutting the Mean Time to Repair (MTTR) from minutes of manual human effort to milliseconds of machine computation.

To implement this, organizations should begin by auditing their most critical API endpoints. A key question to ask is whether an LLM, given a snippet of current error logs, can accurately explain the business impact of a failure. If not, it is time to embed a semantic layer within the telemetry system. This foundational shift lets agents operate with greater autonomy and resilience, minimizing downtime and maximizing operational efficiency.
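A cheap first pass at that audit, before putting an LLM in the loop, is to measure how many log lines even carry the fields an LLM would need. The sketch below assumes JSON-lines logs and a hypothetical set of required fields; it does not replace the LLM test, it only flags endpoints that would obviously fail it.

```python
import json

# Hypothetical minimum set of fields a log entry needs before an LLM can
# plausibly explain its business impact. Adjust to your own schema.
REQUIRED_FIELDS = ("component", "explanation", "business_impact")


def audit_log_lines(lines: list[str]) -> dict[str, float]:
    """Return the share of log lines that carry each semantic field.

    Lines that are not JSON objects (e.g. plain-text errors or stack traces)
    count as missing every field.
    """
    counts = {field: 0 for field in REQUIRED_FIELDS}
    for line in lines:
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue
        if not isinstance(entry, dict):
            continue
        for field in REQUIRED_FIELDS:
            if entry.get(field):  # present and non-empty
                counts[field] += 1
    total = len(lines) or 1  # avoid division by zero on an empty sample
    return {field: counts[field] / total for field in REQUIRED_FIELDS}


sample = [
    "Error 500: null pointer exception",
    json.dumps({
        "component": "procurement-agent",
        "explanation": "The vendor 'Last_updated' field was null.",
        "business_impact": "Purchase order blocked.",
    }),
]
print(audit_log_lines(sample))
# {'component': 0.5, 'explanation': 0.5, 'business_impact': 0.5}
```

A low score on a critical endpoint is a signal to add the semantic layer there first.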

Stateless API Design for Adaptive Workflows

The majority of enterprise APIs are designed around a synchronous “Request-Response” loop, where a client initiates a request, the server provides an answer, and the connection is subsequently closed. This model reflects a linear approach to processing. However, agentic workflows are inherently non-linear and dynamic. An AI agent might initiate a task, encounter a permission barrier, pivot to an alternative data source, and then return to its original task, all within a single overarching process.

In such a dynamic operational environment, managing “state” is paramount. If APIs are overly rigid, the agent risks losing the context or thread of its operation the moment a timeout occurs or an unexpected event interrupts the flow. Therefore, a critical shift is required toward asynchronous, event-driven architectures (EDA). This architectural paradigm allows for a more flexible and resilient interaction model, where agents can trigger actions and then disengage, awaiting specific events to signal their re-engagement.

Under an EDA model, agents should communicate with a “message bus,” such as Apache Kafka or Amazon EventBridge, rather than making direct, blocking calls to traditional legacy databases. This approach facilitates “Long-Running Tasks,” enabling an agent to trigger an action, temporarily enter a “sleep” state while awaiting a third-party verification, and then seamlessly resume its operation from the exact point it left off once the relevant event is published back to the bus. This prevents workflow disruptions and ensures continuity.
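The long-running pattern can be sketched with Python's `asyncio`. This is a toy that assumes an in-memory bus: `InMemoryBus`, its `subscribe`/`publish` methods, the topic names, and the `checkpoints` dict are all hypothetical stand-ins and do not reflect the Kafka or EventBridge client APIs. In production the checkpoint would live in durable storage so that a fresh process could resume the task.

```python
import asyncio
from collections import defaultdict


class InMemoryBus:
    """Toy stand-in for a message bus such as Kafka or EventBridge.

    The subscribe/publish methods are hypothetical and do not mirror
    either product's client API.
    """

    def __init__(self) -> None:
        self._subscribers: dict[str, list[asyncio.Queue]] = defaultdict(list)

    def subscribe(self, topic: str) -> asyncio.Queue:
        queue: asyncio.Queue = asyncio.Queue()
        self._subscribers[topic].append(queue)
        return queue

    async def publish(self, topic: str, event: dict) -> None:
        for queue in self._subscribers[topic]:
            await queue.put(event)


# Hypothetical checkpoint store; in production this would be durable storage.
checkpoints: dict[str, dict] = {}


async def procurement_agent(bus: InMemoryBus, task_id: str) -> str:
    # Subscribe to the reply topic *before* triggering the action,
    # so the completion event cannot be missed.
    replies = bus.subscribe(f"verification.completed.{task_id}")
    checkpoints[task_id] = {"step": "awaiting_verification", "vendor": "ACME"}
    await bus.publish("verification.requested", {"task_id": task_id, "vendor": "ACME"})

    # "Sleep": no blocking call is held open; the agent just waits for an event.
    event = await replies.get()

    # Resume from the checkpoint rather than from local variables.
    state = checkpoints[task_id]
    state["step"] = "completed"
    return f"{state['vendor']} verified={event['approved']}"


async def third_party_verifier(bus: InMemoryBus, requests: asyncio.Queue) -> None:
    request = await requests.get()
    await asyncio.sleep(0.01)  # simulate a slow external check
    await bus.publish(f"verification.completed.{request['task_id']}", {"approved": True})


async def main() -> str:
    bus = InMemoryBus()
    requests = bus.subscribe("verification.requested")
    verifier = asyncio.create_task(third_party_verifier(bus, requests))
    result = await procurement_agent(bus, "po-42")
    await verifier
    return result


print(asyncio.run(main()))  # prints "ACME verified=True"
```

The agent subscribes to its reply topic before publishing the request; doing it the other way round risks missing a fast reply.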

A key IT playbook tip is to avoid merely “bolting on” agents to existing legacy REST APIs. Instead, organizations should construct an abstraction layer, often referred to as an “agent gateway.” This gateway is responsible for converting synchronous responses from legacy systems into asynchronous events that AI agents can subscribe to. This architectural modification ensures that agents interact with a consistent, event-driven environment, enabling self-correcting and adaptive workflows essential for truly autonomous operations.
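A minimal sketch of such a gateway, assuming Python 3.9+ (for `asyncio.to_thread`) and a hypothetical `legacy_credit_check` function standing in for a blocking REST call. `AgentGateway` and its topic naming convention (`<topic>.succeeded` / `<topic>.failed`) are illustrative, not an existing product.

```python
import asyncio
import time
from collections import defaultdict
from typing import Any, Callable


def legacy_credit_check(customer_id: str) -> dict:
    """Stand-in for a blocking call to a legacy REST API (hypothetical)."""
    time.sleep(0.05)  # the legacy system holds the caller until it answers
    return {"customer_id": customer_id, "credit_ok": True}


class AgentGateway:
    """Hypothetical agent gateway: takes a request, returns at once, and later
    publishes the legacy system's answer as a success or failure event."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Callable[[dict], Any]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], Any]) -> None:
        self._handlers[topic].append(handler)

    def _publish(self, topic: str, event: dict) -> None:
        for handler in self._handlers[topic]:
            handler(event)

    def submit(self, topic: str, legacy_call: Callable[..., dict], *args: Any) -> asyncio.Task:
        async def run() -> None:
            try:
                # Run the blocking call in a worker thread so the event loop
                # (and every agent on it) stays responsive.
                result = await asyncio.to_thread(legacy_call, *args)
            except Exception as exc:  # failures become events too
                self._publish(f"{topic}.failed", {"error": repr(exc)})
            else:
                self._publish(f"{topic}.succeeded", result)

        # The task handle is returned only so callers (and this demo) can wait
        # for completion; an agent would normally just rely on its subscription.
        return asyncio.create_task(run())


async def demo() -> list[dict]:
    received: list[dict] = []
    gateway = AgentGateway()
    gateway.subscribe("credit_check.succeeded", received.append)
    task = gateway.submit("credit_check", legacy_credit_check, "C-1001")
    # The agent is free to do other work here instead of blocking on the legacy API.
    await task
    return received


print(asyncio.run(demo()))
```

Failures are turned into events as well, so a subscribing agent can pivot to another data source instead of crashing on an exception it never saw.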

The Metadata Layer: From Clean to Context-Rich Data

The long-held maxim “Data is the new oil” still resonates, but in 2026 data is merely the raw material; metadata is the true fuel. Businesses have invested millions in “cleaning” data within data warehouses and lakes, yet clean data often lacks the intent and contextual richness that AI agents require to make informed decisions. An agent needs more than a customer’s balance of $5,000; it needs context: Is this a high-value customer? Is this balance overdue? Was there a recent support ticket associated with this specific amount?
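The difference between clean data and context-rich data can be shown with a small join. In this sketch every table, field name, and value (`ledger_row`, `crm`, `tickets`, the 30-day ticket window) is hypothetical; the point is that the agent receives answers to the three questions rather than a bare number.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical source rows. The clean ledger row alone only says "5000".
ledger_row = {"customer_id": "C-1001", "balance": 5000.00, "due_date": date(2025, 12, 1)}
crm = {"C-1001": {"tier": "high_value"}, "C-2002": {"tier": "standard"}}
tickets = [
    {"id": "T-77", "customer_id": "C-1001", "amount": 5000.00, "opened": date(2025, 12, 20)},
    {"id": "T-80", "customer_id": "C-2002", "amount": 120.00, "opened": date(2025, 12, 22)},
]


@dataclass
class BalanceInContext:
    customer_id: str
    balance: float
    high_value: bool            # Is this a high-value customer?
    overdue: bool               # Is this balance overdue?
    related_tickets: list[str]  # Recent tickets about this exact amount


def enrich(row: dict, today: date, ticket_window_days: int = 30) -> BalanceInContext:
    cid = row["customer_id"]
    return BalanceInContext(
        customer_id=cid,
        balance=row["balance"],
        high_value=crm.get(cid, {}).get("tier") == "high_value",
        overdue=row["balance"] > 0 and today > row["due_date"],
        related_tickets=[
            t["id"]
            for t in tickets
            if t["customer_id"] == cid
            and t["amount"] == row["balance"]  # use Decimal for real money
            and (today - t["opened"]).days <= ticket_window_days
        ],
    )


print(enrich(ledger_row, today=date(2026, 1, 5)))
```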

Data management is moving toward knowledge graphs and vector metadata. This layer sits atop structured data and provides the “connective tissue” that explains how disparate data points across the enterprise relate to one another. Knowledge graphs map entities and their relationships, offering a holistic view of information, while vector metadata lets agents understand and retrieve relevant data by meaning rather than by exact keyword.
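Both ideas fit in a short, dependency-free Python sketch, assuming illustrative data throughout. The knowledge graph is a list of triples walked breadth-first; the vector metadata is a toy set of 3-dimensional vectors compared by cosine similarity. Real embeddings would come from an embedding model and have far more dimensions, and a production graph would typically live in a graph database.

```python
import math
from collections import defaultdict, deque

# --- Knowledge graph: (subject, relation, object) triples (illustrative data) ---
triples = [
    ("C-1001", "has_invoice", "INV-9"),
    ("INV-9", "amount_usd", "5000"),
    ("C-1001", "opened_ticket", "T-77"),
    ("T-77", "disputes", "INV-9"),
    ("C-1001", "segment", "enterprise"),
]
graph: dict[str, list[tuple[str, str]]] = defaultdict(list)
for subject, relation, obj in triples:
    graph[subject].append((relation, obj))


def neighborhood(entity: str, max_hops: int = 2) -> list[tuple[str, str, str]]:
    """Breadth-first walk returning every fact reachable within max_hops."""
    seen = {entity}
    facts = []
    queue = deque([(entity, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue
        for relation, target in graph.get(node, []):
            facts.append((node, relation, target))
            if target not in seen:
                seen.add(target)
                queue.append((target, depth + 1))
    return facts


# --- Vector metadata: embeddings attached to data assets (toy 3-d vectors) ---
def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


column_vectors = {
    "ar_ledger.balance": [0.9, 0.1, 0.0],
    "crm.segment": [0.1, 0.9, 0.1],
    "support.ticket_text": [0.2, 0.1, 0.9],
}
# Stands in for an embedded question such as "what does this customer owe?"
query_vector = [0.8, 0.2, 0.1]

best_column = max(column_vectors, key=lambda name: cosine(query_vector, column_vectors[name]))

print(neighborhood("C-1001"))
print(best_column)  # ar_ledger.balance
```

Walking the graph from `C-1001` surfaces the fact that ticket `T-77` disputes invoice `INV-9`, the kind of relationship a keyword match on the raw balance would miss.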

The direct outcome of providing agents with such a rich metadata layer is a significant reduction in the “hallucination” problem. Instead of guessing based on a coincidental keyword match, the agent can navigate a comprehensive map of the business’s underlying logic and data relationships. This ensures that decisions are based on accurate and contextually relevant information, dramatically improving the reliability and precision of agentic operations.

For organizations looking to implement this, an essential IT playbook tip is to invest in a data catalog that fully supports semantic tagging. It is crucial to ensure that data engineers are not merely transferring rows and columns but are actively defining the “meaning” of these data elements in a manner accessible via Retrieval-Augmented Generation (RAG) pipelines. This strategic investment in a robust metadata layer is indispensable for empowering AI agents to operate intelligently and effectively within complex enterprise environments, moving beyond simple data cleanliness to truly context-rich insights.
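As a sketch of what defining "meaning" could look like, assume a catalog entry that carries a plain-language definition and semantic tags, and that can render itself as a text chunk for a RAG pipeline to embed. `CatalogEntry`, `to_rag_document`, `documents_with_tag`, and the example definitions are hypothetical; real data catalogs have their own schemas and export formats.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    """A catalog record that defines what a column means, not just its type."""

    table: str
    column: str
    definition: str                                # business meaning in plain language
    tags: list[str] = field(default_factory=list)  # semantic tags for filtering
    owner: str = "unassigned"

    def to_rag_document(self) -> dict:
        """Render the entry as a text chunk plus metadata, ready for embedding."""
        text = (
            f"{self.table}.{self.column}: {self.definition} "
            f"Tags: {', '.join(self.tags) or 'none'}. Owner: {self.owner}."
        )
        return {
            "id": f"{self.table}.{self.column}",
            "text": text,
            "metadata": {"tags": list(self.tags), "owner": self.owner},
        }


# Illustrative entries; the definitions are examples, not a real schema.
catalog = [
    CatalogEntry(
        "ar_ledger", "balance",
        "Amount the customer currently owes in USD, including invoices past their due date.",
        ["finance", "accounts-receivable"], "finance-data",
    ),
    CatalogEntry(
        "vendors", "Last_updated",
        "Date of the last vendor master-data review; a null value means no valid match can be made.",
        ["procurement", "data-quality"],
    ),
]


def documents_with_tag(entries: list[CatalogEntry], tag: str) -> list[dict]:
    """Select the catalog documents a RAG pipeline should index for one domain."""
    return [e.to_rag_document() for e in entries if tag in e.tags]


for doc in documents_with_tag(catalog, "procurement"):
    print(doc["text"])
```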

Scaling Autonomous Operations Without Technical Debt

In the upcoming year, there will be a natural inclination to develop customized, “bespoke” AI agents for each individual department—an HR agent, a finance agent, a sales agent, and so forth. However, this fragmented approach is a clear recipe for creating a new form of “shadow IT” and accumulating substantial technical debt in the long run. Such a strategy leads to silos, complicates maintenance, and hinders interoperability, ultimately undermining the goal of enterprise-wide autonomy.

Instead, the strategic imperative is to focus on establishing a universal platform grounded in the three fundamental pillars: semantic telemetry, stateless API design, and a comprehensive metadata layer. By building this foundational infrastructure, organizations are creating an environment where any AI agent can be seamlessly integrated. Once plugged in, the agent will immediately comprehend the enterprise’s specific language, operational state, and contextual nuances without requiring extensive bespoke configuration or reprogramming.
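One way to read this in code is as a set of interfaces: department agents depend only on a shared `Platform` exposing the three pillars, never on each other or on legacy systems directly. The sketch below uses Python's `typing.Protocol`; every class, method, and topic name is hypothetical, and the in-memory implementations exist only so the example runs.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Protocol


# --- The three pillars as interfaces (all names here are hypothetical) ---
class Telemetry(Protocol):
    def explain(self, component: str, explanation: str, impact: str) -> None: ...


class EventBus(Protocol):
    def publish(self, topic: str, event: dict) -> None: ...
    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None: ...


class MetadataLayer(Protocol):
    def describe(self, entity_id: str) -> dict: ...


@dataclass
class Platform:
    """Shared foundation that every department agent plugs into."""
    telemetry: Telemetry
    bus: EventBus
    metadata: MetadataLayer


# --- Minimal in-memory implementations so the sketch runs end to end ---
class ListTelemetry:
    def __init__(self) -> None:
        self.entries: list[dict] = []

    def explain(self, component: str, explanation: str, impact: str) -> None:
        self.entries.append(
            {"component": component, "explanation": explanation, "business_impact": impact}
        )


class LocalBus:
    def __init__(self) -> None:
        self._handlers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def publish(self, topic: str, event: dict) -> None:
        for handler in self._handlers[topic]:
            handler(event)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._handlers[topic].append(handler)


class DictMetadata:
    def __init__(self, facts: dict[str, dict]) -> None:
        self._facts = facts

    def describe(self, entity_id: str) -> dict:
        return self._facts.get(entity_id, {})


# --- A department agent written only against the platform interfaces ---
def register_collections_agent(platform: Platform) -> None:
    def on_invoice_overdue(event: dict) -> None:
        context = platform.metadata.describe(event["customer_id"])
        if context.get("tier") == "high_value":
            platform.bus.publish("account_manager.notify", event)
        else:
            platform.bus.publish("dunning.reminder", event)
            platform.telemetry.explain(
                "collections-agent",
                f"Customer {event['customer_id']} is not high value, so no account manager was notified.",
                "The overdue invoice follows the standard reminder flow instead.",
            )

    platform.bus.subscribe("invoice.overdue", on_invoice_overdue)


telemetry = ListTelemetry()
shared = Platform(telemetry, LocalBus(), DictMetadata({"C-1001": {"tier": "high_value"}}))
escalations: list[dict] = []
shared.bus.subscribe("account_manager.notify", escalations.append)
register_collections_agent(shared)

shared.bus.publish("invoice.overdue", {"customer_id": "C-1001"})
shared.bus.publish("invoice.overdue", {"customer_id": "C-2002"})
print(escalations)
```

Swapping `LocalBus` for a real message bus, or `DictMetadata` for a knowledge-graph client, would not require touching the agent, which is the property that keeps new agents from becoming bespoke technical debt.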

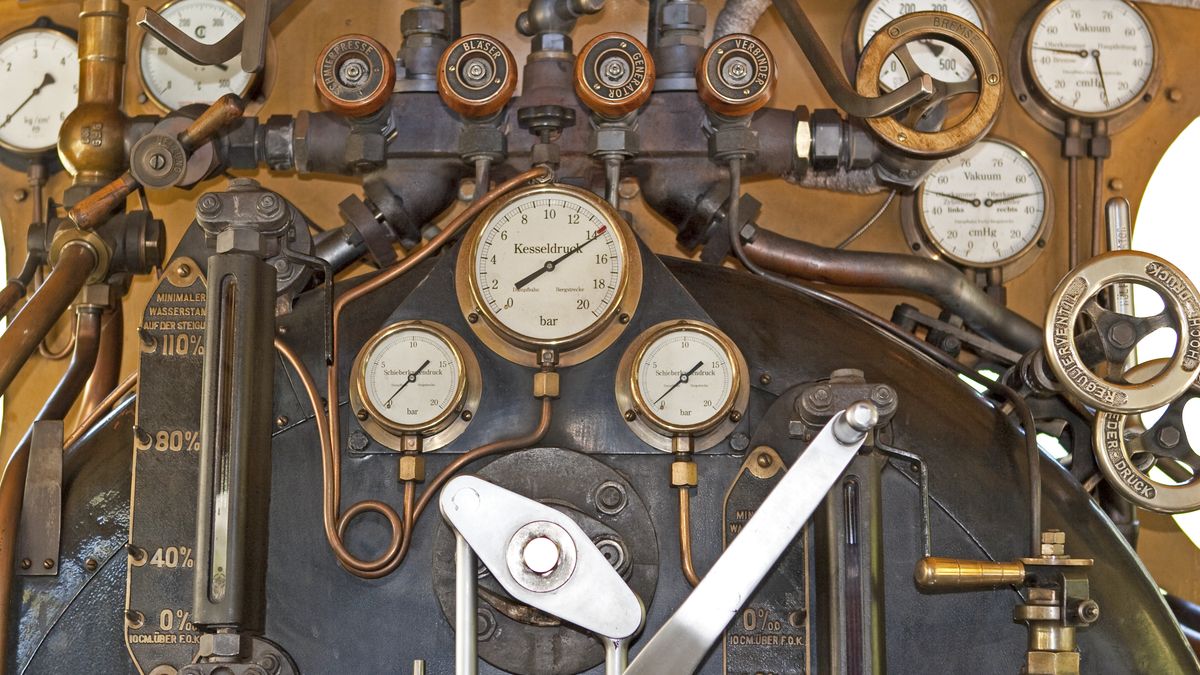

This holistic approach is the pathway to transitioning from mere “cool demos” of AI capabilities to achieving truly autonomous operations across the entire organization. The transformation is not just about changing the software applications; it involves a profound overhaul of the underlying technological “plumbing.” As any seasoned IT professional understands, the true measure of success and the key to long-term reliability lies not in the superficial appeal of a shiny new faucet, but in the robust and dependable integrity of the pipes that support the entire system. This foundational shift ensures scalability and sustainability for future AI initiatives.